I. Prepare the libraries we need

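1. requests // fetch web pages
2. json // parse JSON data, converting it into Python dicts
3. re // search strings with regular expressions
4. pyquery // query HTML with CSS selectors
5. pymongo // store data in MongoDB
6. MySQLdb // store data in MySQL (provided by the mysqlclient package)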

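Create a configuration file, config.py, to hold the database connection settings (host, user, password, and database/collection names):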

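```python
# config.py: database connection settings
MYSQL_HOST = 'localhost'
MYSQL_USER = 'root'
MYSQL_PASSWORD = ''
MYSQL_DB = 'test'

MOGO_URL = 'localhost'

# Baidu Nuomi
MOGO_DB = 'nuomi'
MOGO_TABLE = 'product'
# Meituan
MOGO_DB_M = 'meituan'
MOGO_TABLE_M = 'product'
# Dianping
MOGO_DB_D = 'dazhong'
MOGO_TABLE_D = 'product'
```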
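An empty root password in a source file is fine for a local test, but the same settings can also be read from environment variables so credentials stay out of the code. A minimal sketch of that variant of config.py; the environment variable names are an assumption, and the fallbacks are the defaults listed above:

```python
import os

# Read each setting from an environment variable (names assumed here),
# falling back to the local defaults when the variable is not set
MYSQL_HOST = os.environ.get('MYSQL_HOST', 'localhost')
MYSQL_USER = os.environ.get('MYSQL_USER', 'root')
MYSQL_PASSWORD = os.environ.get('MYSQL_PASSWORD', '')
MYSQL_DB = os.environ.get('MYSQL_DB', 'test')
MOGO_URL = os.environ.get('MOGO_URL', 'localhost')
```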

II. Baidu Nuomi

1. Get the URL of every city

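```python
import re
import requests
import MySQLdb
import pymongo
from urllib.parse import urlencode
from pyquery import PyQuery as pq
from config import *

keyword = '火锅'  # search keyword ("hotpot"); example value, set your own


def get_city():
    url = 'https://www.nuomi.com/pcindex/main/changecity'
    response = requests.get(url)
    response.encoding = 'utf-8'
    doc = pq(response.text)
    # Each <li> in the city list links to that city's site
    items = doc('.city-list .cities li').items()
    for item in items:
        product = {
            'city': item.find('a').text(),
            # The hrefs are protocol-relative (//...), so prepend the scheme
            'url': 'https:' + item.find('a').attr('href')
        }
        get_pase(product['url'], keyword)
```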
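The city links on the change-city page are protocol-relative (they start with `//`), which is why the code prepends `https:`. The standard-library `urllib.parse.urljoin` resolves such a link against the page URL and also copes with absolute or path-only hrefs. A small sketch; `city_url` is a hypothetical helper and the sample hrefs are made up:

```python
from urllib.parse import urljoin

PAGE_URL = 'https://www.nuomi.com/pcindex/main/changecity'


def city_url(href):
    """Resolve a city link's href against the page it was found on."""
    # '//bj.nuomi.com' (protocol-relative) inherits the page's https scheme;
    # absolute and path-only hrefs are resolved correctly as well
    return urljoin(PAGE_URL, href)


for href in ['//bj.nuomi.com', 'https://sh.nuomi.com', '/pcindex/main/changecity']:
    print(href, '->', city_url(href))
```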

2. Search for products by keyword

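```python
def get_pase(url, keyword):
    # <city url>/search?k=<keyword>, with the keyword URL-encoded
    urls = url + '/search?' + urlencode({'k': keyword})
    response = requests.get(urls)
    response.encoding = 'utf-8'
    # The results page contains a "noresult-tip" element when nothing matches
    if re.findall('noresult-tip', response.text):
        print('Sorry, nothing matched your search')
        return
    # Collect the first results page plus every page linked from the pager.
    # Pager links lead to more result pages, not to shop pages.
    pages = [response.text]
    req = r'<a href="(.*?)" .*? class="ui-pager-normal" .*?</a>'
    for i in re.findall(req, response.text):
        next_page = requests.get(url + i)
        next_page.encoding = 'utf-8'
        pages.append(next_page.text)
    # Shop links are the ones wrapping the shop thumbnail image
    req = r'<a href="(.*?)" target="_blank"><img src=".*?" class="shop-infoo-list-item-img" /></a>'
    for html in pages:
        for i in re.findall(req, html):
            get_pase_url('https:' + i)
```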

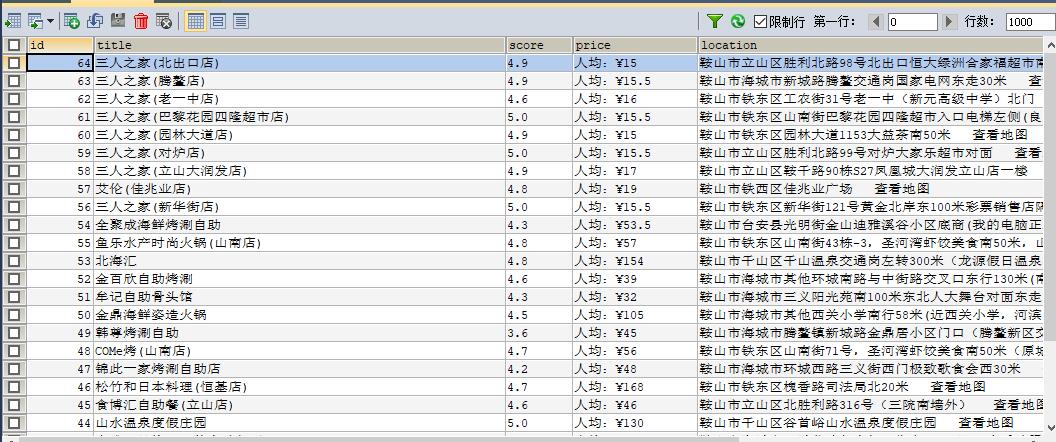
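The two regular expressions in `get_pase` can be checked without hitting the live site by running them against a hand-written results page. A sketch with a made-up HTML fragment in the shape the patterns expect (a link around the `shop-infoo-list-item-img` thumbnail, and a `ui-pager-normal` pager link); the shop URLs are invented and real pages may differ:

```python
import re
from urllib.parse import urlencode

# Patterns copied from get_pase
SHOP_RE = r'<a href="(.*?)" target="_blank"><img src=".*?" class="shop-infoo-list-item-img" /></a>'
PAGER_RE = r'<a href="(.*?)" .*? class="ui-pager-normal" .*?</a>'

# Hypothetical fragment of a search results page
SAMPLE_HTML = (
    '<a href="//www.nuomi.com/shop/1001" target="_blank"><img src="a.jpg" class="shop-infoo-list-item-img" /></a>\n'
    '<a href="//www.nuomi.com/shop/1002" target="_blank"><img src="b.jpg" class="shop-infoo-list-item-img" /></a>\n'
    '<a href="/search?k=x&p=2" data-page="2" class="ui-pager-normal" >2</a>\n'
)

shops = ['https:' + u for u in re.findall(SHOP_RE, SAMPLE_HTML)]
pages = re.findall(PAGER_RE, SAMPLE_HTML)
print(shops)
print(pages)

# urlencode percent-encodes a Chinese keyword for the search URL
search_path = '/search?' + urlencode({'k': '火锅'})
print(search_path)
```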

3. Extract product details from each shop page

```python
def get_pase_url(url):
    response = requests.get(url)
    response.encoding = 'utf-8'
    doc = pq(response.text)
    product = {
        'title': doc('.shop-box .shop-title').text(),
        'score': doc('body > div.main-container > div.shop-box > p > span.score').text(),
        'price': doc('.shop-info .price').text(),
        'location': doc('.item .detail-shop-address').text(),
        # The 2nd to 4th <li> of the shop box hold the phone number,
        # opening hours and recommendations (tuijian)
        'phone': doc('body > div.main-container > div.shop-box > ul > li:nth-child(2) > p').text(),
        'time': doc('body > div.main-container > div.shop-box > ul > li:nth-child(3) > p').text(),
        'tuijian': doc('body > div.main-container > div.shop-box > ul > li:nth-child(4) > p').text()
    }
    print(product)
    save_mysql(product)
    # save_mongodb(product)  # use this instead to store in MongoDB
```
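Every step of the crawler calls `requests.get()` without a timeout, so one stalled connection can hang the run, and one failed request aborts it. A sketch of a small retry wrapper; `fetch_with_retry` is a hypothetical helper, and in the real script `fetch` would be something like `lambda u: requests.get(u, timeout=10)`:

```python
import time


def fetch_with_retry(fetch, url, retries=3, delay=1.0):
    """Call fetch(url), retrying on any exception with a growing pause.

    fetch is any callable taking a URL, e.g. lambda u: requests.get(u, timeout=10).
    """
    for attempt in range(1, retries + 1):
        try:
            return fetch(url)
        except Exception:
            if attempt == retries:
                raise  # give up after the last attempt
            time.sleep(delay * attempt)  # wait a little longer each time


# Demo with a fake fetcher that fails twice, then succeeds
calls = []


def flaky(url):
    calls.append(url)
    if len(calls) < 3:
        raise ConnectionError('temporary failure')
    return '<html>ok</html>'


result = fetch_with_retry(flaky, 'https://example.com', delay=0)
print(result)
```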

4. Save to the database

```python
def save_mysql(product):
    conn = MySQLdb.connect(MYSQL_HOST, MYSQL_USER, MYSQL_PASSWORD, MYSQL_DB, charset='utf8')
    cursor = conn.cursor()
    columns = ('title', 'score', 'price', 'location', 'phone', 'time', 'tuijian')
    # %s placeholders let the driver escape the values; str.format() breaks
    # on quotes in the scraped text and allows SQL injection
    cursor.execute(
        'insert into nuomi({}) values({})'.format(','.join(columns), ','.join(['%s'] * len(columns))),
        tuple(product[c] for c in columns))
    conn.commit()  # MySQLdb does not autocommit by default
    conn.close()
    print('Saved to database', product)
```
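Building the INSERT statement with `str.format()` breaks as soon as a scraped value contains a quote, and passing the values as query parameters avoids that. The sketch below swaps in the standard-library `sqlite3` module as a stand-in for MySQLdb so it runs without a server. `sqlite3` uses `?` placeholders where MySQLdb uses `%s`, but the idea is the same. The sample row is made up:

```python
import sqlite3

conn = sqlite3.connect(':memory:')
conn.execute('create table nuomi(title text, price text)')

product = {'title': "Lao Wang's Hotpot", 'price': '88'}

# Building SQL with str.format() breaks on the apostrophe in the title
bad_sql = "insert into nuomi(title, price) values('{}','{}')".format(product['title'], product['price'])
format_failed = False
try:
    conn.execute(bad_sql)
except sqlite3.OperationalError as e:
    format_failed = True
    print('format() failed:', e)

# With placeholders the driver passes the values separately, so quotes are safe
conn.execute('insert into nuomi(title, price) values(?, ?)', (product['title'], product['price']))
conn.commit()
rows = conn.execute('select title, price from nuomi').fetchall()
print(rows)
```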

```python
def save_mongodb(result):
    client = pymongo.MongoClient(MOGO_URL)
    db = client[MOGO_DB]
    try:
        # insert() was removed in PyMongo 4; insert_one() replaces it
        if db[MOGO_TABLE].insert_one(result):
            print('Saved', result)
    except Exception:
        print('Save failed', result)
```

III. Meituan

1. Get the URL of every city

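```python
import re
import json
import requests
import MySQLdb
import pymongo
from pyquery import PyQuery as pq
from config import *

keyword = '火锅'  # search keyword ("hotpot"); example value, set your own


def get_city():
    url = 'http://www.meituan.com/changecity/'
    response = requests.get(url)
    response.encoding = 'utf-8'
    doc = pq(response.text)
    # Every city link on the "change city" page
    items = doc('.city-area .cities .city').items()
    for item in items:
        product = {
            # The hrefs are protocol-relative (//...), so prepend the scheme
            'url': 'http:' + item.attr('href'),
            'city': item.text()
        }
        get_url_number(product['url'])
```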

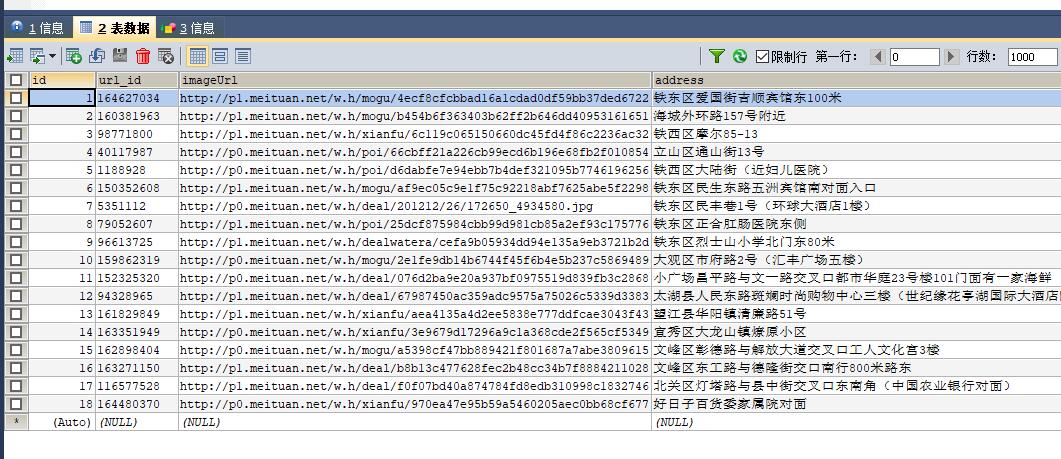

2. Search for products by keyword

```python
def get_url_number(url):
    try:
        response = requests.get(url)
        # The city page embeds its numeric city id in a JSON blob
        req = r'{"currentCity":{"id":(.*?),"name":".*?","pinyin":'
        number_url = re.findall(req, response.text)
        api = 'http://apimobile.meituan.com/group/v4/poi/pcsearch/{}'.format(number_url[0])
        # Request 32 results at a time, stepping the offset through the first 500
        for code in range(0, 500, 32):
            # params= lets requests URL-encode the (Chinese) keyword
            response = requests.get(api, params={'limit': 32, 'offset': code, 'q': keyword})
            results = json.loads(response.text)['data']['searchResult']
            if not results:
                break  # no more matches in this city
            # Save every result on the page, not only the first one
            for result in results:
                product = {
                    'url_id': result['id'],
                    'imageUrl': result['imageUrl'],
                    'address': result['address'],
                    'lowestprice': result['lowestprice'],
                    'title': result['title']
                }
                save_mysql(product)
    except Exception as e:
        # Skip this city on any network or parsing error, but report it
        print('Failed to crawl', url, e)
        return None
```
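Factoring the paging out of `get_url_number` makes it testable without the network. The sketch below pages through the results 32 at a time and stops at the first empty page. `iter_results` and `fetch_page` are hypothetical names: `fetch_page` stands in for the `requests.get(...)` plus `json.loads()` step, and the fake data only mimics the `data.searchResult` shape the code reads:

```python
def iter_results(fetch_page, limit=32, max_offset=500):
    """Yield every item of data['searchResult'], page by page.

    fetch_page(offset, limit) must return the decoded JSON for one page.
    """
    for offset in range(0, max_offset, limit):
        results = fetch_page(offset, limit)['data']['searchResult']
        if not results:
            break  # no more matches: stop paging early
        yield from results


# Fake API holding 70 results, shaped like the response the code reads
FAKE = [{'id': n, 'title': 'shop %d' % n} for n in range(70)]


def fake_fetch(offset, limit):
    return {'data': {'searchResult': FAKE[offset:offset + limit]}}


items = list(iter_results(fake_fetch))
print(len(items))
```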

3. Save to the database

```python
def save_mysql(product):
    conn = MySQLdb.connect(MYSQL_HOST, MYSQL_USER, MYSQL_PASSWORD, MYSQL_DB, charset='utf8')
    cursor = conn.cursor()
    columns = ('url_id', 'imageUrl', 'address', 'lowestprice', 'title')
    # %s placeholders let the driver escape the values instead of str.format()
    cursor.execute(
        'insert into meituan({}) values({})'.format(','.join(columns), ','.join(['%s'] * len(columns))),
        tuple(product[c] for c in columns))
    conn.commit()  # MySQLdb does not autocommit by default
    conn.close()
    print('Saved to database', product)
```
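`save_mysql()` opens a new connection for every product. When a whole page of results is available at once, one connection and a single `executemany()` call can store them together. A sketch that again uses `sqlite3` in place of MySQLdb (with MySQLdb the placeholders would be `%s`); `save_batch` is a hypothetical helper and the rows are made up:

```python
import sqlite3

COLUMNS = ('url_id', 'imageUrl', 'address', 'lowestprice', 'title')


def save_batch(conn, products):
    """Insert a list of product dicts with one executemany() call."""
    sql = 'insert into meituan({}) values({})'.format(','.join(COLUMNS), ','.join('?' * len(COLUMNS)))
    conn.executemany(sql, [tuple(p[c] for c in COLUMNS) for p in products])
    conn.commit()


conn = sqlite3.connect(':memory:')
conn.execute('create table meituan(url_id, imageUrl, address, lowestprice, title)')
save_batch(conn, [
    {'url_id': 1, 'imageUrl': 'http://example.com/1.jpg', 'address': 'A Rd', 'lowestprice': 30, 'title': 'Shop 1'},
    {'url_id': 2, 'imageUrl': 'http://example.com/2.jpg', 'address': 'B Rd', 'lowestprice': 45, 'title': 'Shop 2'},
])
count = conn.execute('select count(*) from meituan').fetchone()[0]
print(count)
```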

```python
def save_mongodb(result):
    client = pymongo.MongoClient(MOGO_URL)
    db = client[MOGO_DB_M]
    try:
        # insert() was removed in PyMongo 4; insert_one() replaces it
        if db[MOGO_TABLE_M].insert_one(result):
            print('Saved', result)
    except Exception:
        print('Save failed', result)
```

IV. Dianping

1. Get the URL of every city

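```python
import re
import json
import requests
import MySQLdb
import pymongo
from pyquery import PyQuery as pq
from config import *

keyword = '火锅'  # search keyword ("hotpot"); example value, set your own


def get_url_city_id():
    url = 'https://www.dianping.com/ajax/citylist/getAllDomesticCity'
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.186 Safari/537.36',
    }
    response = requests.get(url, headers=headers)
    data = json.loads(response.text)
    # cityMap holds the city groups under the keys "1" to "34"
    for i in range(1, 35):
        url_data = data['cityMap'][str(i)]
        for item in url_data:
            product = {
                'cityName': item['cityName'],
                'cityId': item['cityId'],
                'cityEnName': item['cityEnName']
            }
            get_url_keyword(product)
            break  # only the first city of each group; remove to crawl them all
```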
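The city list JSON is a dict (`cityMap`) of groups, each a list of city objects. Iterating over `cityMap.values()` visits every group without assuming the keys run from "1" to "34". A sketch on a made-up response of that shape; `iter_cities` is a hypothetical helper, only the three fields the code reads are included, and the ids are invented:

```python
def iter_cities(data):
    """Yield the fields used by get_url_keyword for every city in cityMap."""
    for group in data['cityMap'].values():
        for item in group:
            yield {
                'cityName': item['cityName'],
                'cityId': item['cityId'],
                'cityEnName': item['cityEnName'],
            }


SAMPLE = {'cityMap': {
    '1': [{'cityName': '上海', 'cityId': 101, 'cityEnName': 'shanghai', 'extra': 'ignored'}],
    '2': [{'cityName': '北京', 'cityId': 102, 'cityEnName': 'beijing'}],
}}

cities = list(iter_cities(SAMPLE))
print([c['cityEnName'] for c in cities])
```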

2. Search for products by keyword

```python
from urllib.parse import quote


def get_url_keyword(product):
    # Search URL: /search/keyword/<cityId>/0_<URL-encoded keyword>
    urls = 'https://www.dianping.com/search/keyword/{}/0_{}'.format(product['cityId'], quote(keyword))
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.186 Safari/537.36',
    }
    response = requests.get(urls, headers=headers)
    # Each shop in the results is an <a data-hippo-type="shop" ...> link
    req = r'data-hippo-type="shop" title=".*?" target="_blank" href="(.*?)"'
    data = re.findall(req, response.text)
    for url in data:
        get_url_data(url)
```
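The search URL places the keyword directly in the path after `0_`, so a Chinese keyword should be percent-encoded with `urllib.parse.quote()`. A sketch; `search_url` is a hypothetical helper and the city id is made up:

```python
from urllib.parse import quote, unquote


def search_url(city_id, keyword):
    """Build the Dianping keyword-search URL in the form used by get_url_keyword."""
    return 'https://www.dianping.com/search/keyword/{}/0_{}'.format(city_id, quote(keyword))


url = search_url(1, '火锅')
print(url)
# Decoding the last path segment gives the keyword back
print(unquote(url.rsplit('0_', 1)[1]))
```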

3. Extract product details from each shop page

```python
def get_url_data(url):
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.186 Safari/537.36',
        'Host': 'www.dianping.com',
        'Pragma': 'no-cache',
        'Upgrade-Insecure-Requests': '1'
    }
    response = requests.get(url, headers=headers)
    doc = pq(response.text)
    # Remove line breaks and non-breaking spaces (\xa0) from the shop name
    title = doc('#basic-info > h1').text().replace('\n', '').replace('\xa0', '')
    avgPriceTitle = doc('#avgPriceTitle').text()
    # The three spans in #comment_score are the taste, environment and service ratings
    taste = doc('#comment_score > span:nth-of-type(1)').text()
    Environmental = doc('#comment_score > span:nth-of-type(2)').text()
    service = doc('#comment_score > span:nth-of-type(3)').text()
    street_address = doc('#basic-info > div.expand-info.address > span.item').text()
    tel = doc('#basic-info > p > span.item').text()
    info_name = doc('#basic-info > div.promosearch-wrapper > p > span').text()
    time = doc('#basic-info > div.other.J-other > p:nth-of-type(1) > span.item').text()
    product = {
        'title': title,
        'avgPriceTitle': avgPriceTitle,
        'taste': taste,
        'Environmental': Environmental,
        'service': service,
        'street_address': street_address,
        'tel': tel,
        'info_name': info_name,
        'time': time
    }
    save_mysql(product)
```
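The title is cleaned by deleting line breaks and non-breaking spaces (`\xa0`), and the same cleanup is useful for every field scraped from the page. A sketch of a reusable helper (`clean` is a hypothetical name). Unlike the `replace()` calls, it turns such characters into a single space rather than deleting them, which keeps separate words apart. The sample shop name is made up:

```python
import re


def clean(text):
    """Turn \\xa0 into spaces and collapse all whitespace runs to one space."""
    text = text.replace('\xa0', ' ')
    return re.sub(r'\s+', ' ', text).strip()


raw = '\n  老王火锅\xa0(春熙路店)\n'
print(clean(raw))
```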

4. Save to the database

```python
def save_mysql(product):
    conn = MySQLdb.connect(MYSQL_HOST, MYSQL_USER, MYSQL_PASSWORD, MYSQL_DB, charset='utf8')
    cursor = conn.cursor()
    columns = ('title', 'avgPriceTitle', 'taste', 'Environmental', 'service',
               'street_address', 'tel', 'info_name', 'time')
    # %s placeholders let the driver escape the values instead of str.format()
    cursor.execute(
        'insert into dazhong({}) values({})'.format(','.join(columns), ','.join(['%s'] * len(columns))),
        tuple(product[c] for c in columns))
    conn.commit()  # MySQLdb does not autocommit by default
    conn.close()
    print('Saved to database', product)
```

```python
def save_mongodb(product):
    client = pymongo.MongoClient(MOGO_URL)
    db = client[MOGO_DB_D]
    try:
        # insert() was removed in PyMongo 4; insert_one() replaces it
        if db[MOGO_TABLE_D].insert_one(product):
            print('Saved', product)
    except Exception:
        print('Save failed', product)
```
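Sites like Dianping may block clients that send requests in rapid succession, so spacing requests out is a simple way to lower that risk. A sketch of a minimal throttle; `Throttle` is a hypothetical class, the 2-second interval is an arbitrary example, and the demo uses a fake clock so it runs instantly:

```python
import time


class Throttle:
    """Ensure at least `interval` seconds pass between consecutive wait() calls."""

    def __init__(self, interval, clock=time.monotonic, sleep=time.sleep):
        self.interval = interval
        self.clock = clock
        self.sleep = sleep
        self.last = None

    def wait(self):
        now = self.clock()
        if self.last is not None:
            remaining = self.interval - (now - self.last)
            if remaining > 0:
                self.sleep(remaining)
                now += remaining
        self.last = now


# Demo with a fake clock and sleep so nothing actually waits
fake_now = [0.0]
slept = []


def fake_sleep(seconds):
    slept.append(seconds)
    fake_now[0] += seconds


throttle = Throttle(2.0, clock=lambda: fake_now[0], sleep=fake_sleep)
for _ in range(3):
    throttle.wait()  # in the crawler: call before each requests.get(...)
print(slept)
```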

For a more detailed write-up, see my GitHub (TomorrowLi), where the full source code is available.